Project 1: Adversarial machine learning

Tutor: Clémentine Maurice clementine.maurice AT irisa.fr

Context

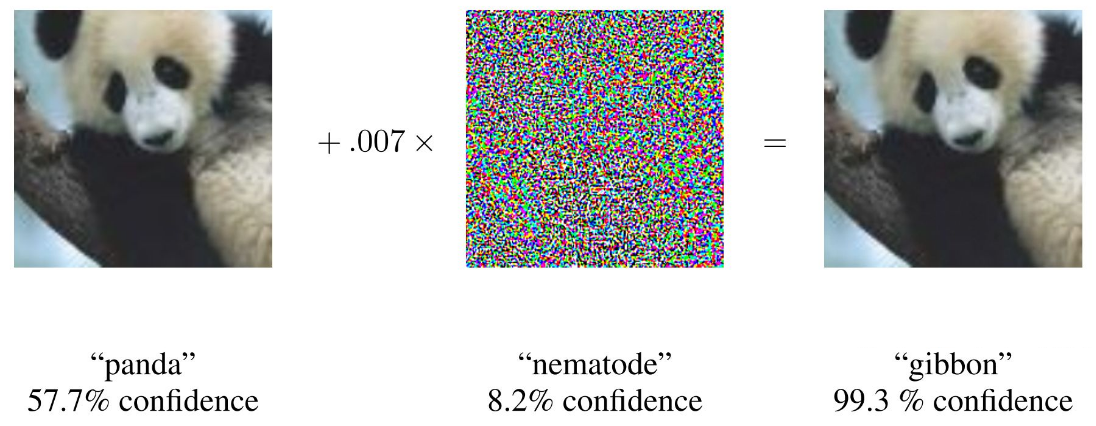

An adversarial attack consists of subtly modifying an original image so that the changes are almost undetectable to the human eye. The modified image is called an adversarial image: when it is submitted to a classifier, it is misclassified, while the original image is classified correctly.
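The idea can be sketched with the fast gradient sign method (FGSM) from the Goodfellow et al. paper in the bibliography. This sketch uses a hypothetical toy classifier in place of the real network: a linear softmax model over four "pixels" with made-up weights `W`. The names `W`, `logits`, `predict`, `input_gradient` and `fgsm_untargeted` are illustrative and are not taken from the project notebook. Each pixel moves by at most `eps` in the direction that increases the loss for the true label. In this toy model, that is enough to change the predicted class.

```python
import math

# Hypothetical toy classifier: a linear softmax model over a 4-"pixel" image.
# The weights are made up for illustration; they are not from the project notebook.
W = [
    [1.0, -1.0, 0.5, 0.0],   # class 0
    [-0.5, 1.0, 0.0, 0.5],   # class 1
    [0.0, 0.0, -0.5, 1.0],   # class 2
]


def logits(x):
    """Class scores W @ x (no bias, for brevity)."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]


def softmax(z):
    m = max(z)  # subtract the max for numerical stability
    exps = [math.exp(v - m) for v in z]
    s = sum(exps)
    return [e / s for e in exps]


def predict(x):
    z = logits(x)
    return z.index(max(z))


def input_gradient(x, label):
    """Gradient of the cross-entropy loss -log p[label] with respect to the input x.

    For a linear softmax model this is W^T (p - onehot(label)).
    """
    p = softmax(logits(x))
    delta = [pk - (1.0 if k == label else 0.0) for k, pk in enumerate(p)]
    return [sum(W[k][j] * delta[k] for k in range(len(W))) for j in range(len(x))]


def sign(v):
    return (v > 0) - (v < 0)


def fgsm_untargeted(x, label, eps):
    """One FGSM step: move each pixel by eps in the direction that increases the loss,
    then clip back to the valid pixel range [0, 1]."""
    g = input_gradient(x, label)
    return [min(1.0, max(0.0, xi + eps * sign(gi))) for xi, gi in zip(x, g)]


x = [0.6, 0.4, 0.5, 0.5]
x_adv = fgsm_untargeted(x, label=predict(x), eps=0.1)
print(predict(x), predict(x_adv))
```

With `eps=0.1`, the prediction should change from class 0 to class 1 while no pixel moves by more than 0.1. On real images, `eps` is kept small enough that the change is hard to see.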

Aim

Given a trained classifier and an example of adversarial example generation that makes the classifier misclassify the input, create a targeted attack, in which the image is classified as a specific target class. The code can be run in Google Colab: https://colab.research.google.com/drive/1lZMw4QejR1mhrCyM3sR_YWRQm3okwLB7
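This comparison assumes that the provided notebook uses FGSM from Goodfellow et al. (check the code to confirm). Under that assumption, the untargeted and targeted attacks differ mainly in one sign and one label. Let $J$ be the loss, $\theta$ the model parameters, $x$ the input and $\varepsilon$ the perturbation size. The untargeted step increases the loss of the true label $y_{\text{true}}$. A common targeted variant instead decreases the loss of the chosen target label $y_{\text{target}}$:

$$x_{\text{adv}} = \operatorname{clip}_{[0,1]}\Big(x + \varepsilon \, \operatorname{sign}\big(\nabla_x J(\theta, x, y_{\text{true}})\big)\Big) \qquad \text{(untargeted)}$$

$$x_{\text{adv}} = \operatorname{clip}_{[0,1]}\Big(x - \varepsilon \, \operatorname{sign}\big(\nabla_x J(\theta, x, y_{\text{target}})\big)\Big) \qquad \text{(targeted)}$$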

Project progression

- Run the given code with the trained classifier and the adversarial example generation on a few inputs.

- Play with the amount of added noise and with different inputs to see how the classifier behaves.

- Turn the misclassification attack into a targeted attack, in which you control the class assigned to the adversarial example.
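This is one possible way to make the transformation, sketched under several assumptions. A hypothetical toy linear softmax model stands in for the notebook's classifier. The attack is an iterative, targeted variant of FGSM, which is a standard technique but not necessarily the intended solution. Each step has size `alpha` and moves against the gradient of the loss for the `target` label. After each step, the image is projected back into a box of radius `eps` around the original and into the pixel range [0, 1]. All names (`W`, `targeted_attack`, and so on) are illustrative.

```python
import math

# Hypothetical toy classifier: linear softmax model with made-up weights,
# standing in for the notebook's network.
W = [
    [1.0, -1.0, 0.5, 0.0],
    [-0.5, 1.0, 0.0, 0.5],
    [0.0, 0.0, -0.5, 1.0],
]


def logits(x):
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]


def predict(x):
    z = logits(x)
    return z.index(max(z))


def input_gradient(x, label):
    """d(-log p[label]) / dx = W^T (softmax(Wx) - onehot(label))."""
    z = logits(x)
    m = max(z)  # subtract the max for numerical stability
    exps = [math.exp(v - m) for v in z]
    total = sum(exps)
    delta = [e / total - (k == label) for k, e in enumerate(exps)]
    return [sum(W[k][j] * delta[k] for k in range(len(W))) for j in range(len(x))]


def targeted_attack(x0, target, eps, alpha, max_steps):
    """Iterative targeted FGSM-style attack.

    Each step moves *against* the gradient of the loss for the target class, which
    raises the target's probability. The image is then projected back into the
    L-infinity ball of radius eps around x0 and into [0, 1].
    Returns the adversarial image and the number of steps taken.
    """
    x = list(x0)
    for step in range(max_steps):
        if predict(x) == target:
            return x, step
        g = input_gradient(x, target)
        x = [xi - alpha * ((gi > 0) - (gi < 0)) for xi, gi in zip(x, g)]
        x = [min(1.0, max(0.0, min(x0j + eps, max(x0j - eps, xj))))
             for xj, x0j in zip(x, x0)]
    return x, max_steps


x0 = [0.6, 0.4, 0.5, 0.5]
x_adv, steps = targeted_attack(x0, target=2, eps=0.2, alpha=0.05, max_steps=10)
print(predict(x0), predict(x_adv), steps)
```

Here the toy model should end up predicting class 2 with every pixel still within `eps` of `x0`. You can explore which targets are hard to reach by trying other values of `target`, `eps` and `alpha`.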

The code is there to be modified and for you to play with. Save the original version and start playing!

We’re bored

You have a few options:

- Train a classifier yourself, but beware: most of the work will consist of collecting a lot of data and labeling it.

- Change the attack from a white-box attack to a black-box attack [1].

- Apply the concept of adversarial machine learning to other kinds of data, like audio [2].

- Create a classifier that is robust to adversarial examples [3].
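For the robust-classifier option, one starting point is the adversarial training objective from the Goodfellow et al. paper. It combines the loss on clean inputs with the loss on FGSM-perturbed inputs, using the same notation as FGSM. The paper uses $\alpha = 0.5$:

$$\tilde{J}(\theta, x, y) = \alpha \, J(\theta, x, y) + (1 - \alpha) \, J\Big(\theta,\; x + \varepsilon \, \operatorname{sign}\big(\nabla_x J(\theta, x, y)\big),\; y\Big)$$

Ensemble adversarial training [3] extends this idea by also training on adversarial examples crafted against other, pre-trained models.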

Bibliography

During the final presentation, you should summarize this paper:

- Ian J. Goodfellow, Jonathon Shlens, Christian Szegedy. Explaining and Harnessing Adversarial Examples. In ICLR 2015. https://arxiv.org/pdf/1412.6572.pdf

Plus one of the following:

- [1] Nicolas Papernot, Patrick McDaniel, Ian Goodfellow, Somesh Jha, Z. Berkay Celik, Ananthram Swami. Practical Black-Box Attacks against Machine Learning. In Asia CCS 2017. https://arxiv.org/pdf/1602.02697.pdf

- [2] Nicholas Carlini, David Wagner. Audio Adversarial Examples: Targeted Attacks on Speech-to-Text. In Deep Learning and Security Workshop 2018. https://nicholas.carlini.com/papers/2018_dls_audioadvex.pdf

- [3] Florian Tramèr, Alexey Kurakin, Nicolas Papernot, Ian Goodfellow, Dan Boneh, Patrick McDaniel. Ensemble Adversarial Training: Attacks and Defenses. In ICLR 2018. https://arxiv.org/pdf/1705.07204.pdf